ClairVoyan Detect & Avoid

Seeing the sky, Securing the flight!

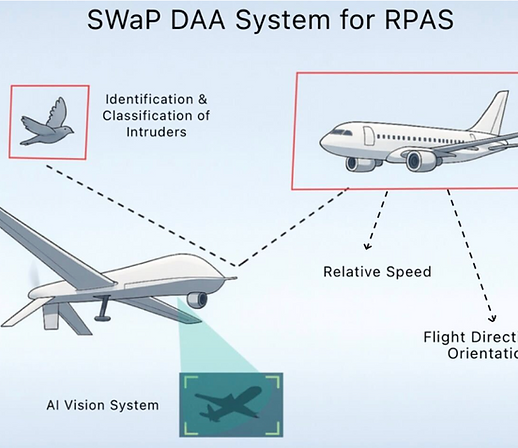

Every flight environment is dynamic and unpredictable. Safe and reliable navigation is essential for every aircraft, and for small remotely piloted aircraft systems (RPAS) it is more than a requirement: it is a challenge. Advanced Detect and Avoid (DAA) systems are fundamental for autonomous operation in controlled airspace. This research aims to design a new generation of DAA technology that meets size, weight, and power (SWaP) constraints while delivering advanced capabilities.

01

Project Overview


ClairVoyan DAA is a next-generation, vision-based Detect and Avoid (DAA) system designed to enable safe integration of Remotely Piloted Aircraft Systems (RPAS) into controlled airspace. While ADS-B and transponders allow tracking of cooperative aircraft, non-cooperative aircraft remain undetectable with existing lightweight systems. This project develops a low-SWaP camera module that combines classical computer vision and advanced machine learning to detect and classify aircraft at long range, estimate range from monocular imagery, and run in real time on Hover’s 250 g CNN-enabled DAA computer. The outcome is an embedded, certifiable, camera-based detection pipeline that strengthens the safety foundation for future RPAS and Advanced Aerial Mobility operations.

02

Significance

Safe RPAS integration requires the ability to “see and avoid” non-cooperative aircraft, a function that today’s lightweight DAA systems cannot reliably provide. ClairVoyan directly addresses this industry-wide gap by delivering a camera-based solution that is small enough, fast enough, and accurate enough to support deployment on compact aviation platforms. The system enhances situational awareness, reduces mid-air collision risk, and enables new operational capabilities including BVLOS missions, wildfire response, cargo transport, and defense applications. By fusing AI with classical vision on an ultra-efficient airborne computer, ClairVoyan represents a major step toward certifiable, industry-ready AI safety systems for next-generation airspace.

03

Challenges

Non-cooperative aircraft detection remains a hard open problem due to:

- Lack of electronic conspicuity: small aircraft often do not carry ADS-B or transponders.
- Range estimation limitations: monocular cameras cannot directly measure distance, yet long-range accuracy is essential for safe DAA maneuvering.
- Real-time computation on low-SWaP hardware: embedded processors must execute AI pipelines under strict size, weight, and power limits.
- Environmental variability: lighting, weather, cluttered backgrounds, and nighttime operations degrade camera performance.
- Certification constraints: aviation requires predictable, safe, and interpretable detection behavior under RTCA DO-387 guidelines.

Together, these challenges make vision-based DAA one of the most difficult yet essential components of autonomous aviation safety.
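To make the range-estimation challenge concrete, the sketch below uses the standard pinhole camera model, in which an object of physical width W at range R spans roughly f·W/R pixels for a focal length f expressed in pixels. The 4000-pixel image width, 60° horizontal field of view, and 11 m wingspan are illustrative assumptions rather than ClairVoyan specifications, and the function names are hypothetical.

```python
import math


def focal_length_px(image_width_px, hfov_deg):
    """Focal length in pixels for a pinhole camera with the given horizontal field of view."""
    return (image_width_px / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)


def apparent_width_px(width_m, range_m, f_px):
    """Pixels spanned by an object of known physical width at a given range."""
    return f_px * width_m / range_m


def range_from_width(width_m, width_px, f_px):
    """Invert the pinhole relation: range implied by a measured pixel width."""
    return f_px * width_m / width_px


# Assumed values, for illustration only.
f_px = focal_length_px(image_width_px=4000, hfov_deg=60.0)
wingspan_m = 11.0  # assumed wingspan of a small general-aviation aircraft

pixels = apparent_width_px(wingspan_m, range_m=2000.0, f_px=f_px)
print(f"apparent width at 2000 m: {pixels:.1f} px")

# Sensitivity: how far the range estimate moves if the width is off by one pixel.
for error_px in (-1.0, 0.0, 1.0):
    estimate = range_from_width(wingspan_m, pixels + error_px, f_px)
    print(f"width error {error_px:+.0f} px -> estimated range {estimate:.0f} m")
```

Under these assumptions the aircraft spans only about 19 pixels at 2 km, so a single pixel of measurement error moves the range estimate by on the order of 100 m. The relation also presumes the true wingspan is known, which is generally not the case for an unidentified non-cooperative aircraft.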

04

Research Approach

ClairVoyan integrates four coordinated research pillars:

- Vision-Based Aircraft Detection:
  - Develop classical vision algorithms (edge extraction, frame differencing, motion cues).
  - Train ML models for long-range object detection and classification in daylight and nighttime conditions.
  - Use CAD-based synthetic rendering to expand training sets and ensure robustness.
- Monocular Range Estimation & Tracking:
  - Explore geometry-based depth cues, image-scale dynamics, and optical-flow-based range trends.
  - Fuse camera outputs with radar, ADS-B, and GNSS to improve range accuracy and reduce false alerts.
- Embedded AI Optimization:
  - Compress and prune neural networks while preserving accuracy.
  - Reduce bandwidth and computational load through frame throttling and optimized feature extraction.
  - Achieve real-time performance on Hover’s 250 g AI DAA computer.
- System Integration & Evaluation:
  - Integrate the full pipeline on Hover’s hardware with Thales’ cooperative aircraft interrogator.
  - Conduct SITL simulations, nighttime/daytime flight scenarios, and benchmark detection performance.
  - Prepare alignment with RTCA certification frameworks for transition to operational flight testing.
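As a rough illustration of the frame differencing named under the first pillar, the sketch below thresholds the absolute difference between two grayscale frames and reports the centroid of the changed pixels. It is a minimal example, not the project's detector: the function names, the threshold of 25 intensity levels, and the synthetic 64×64 frames are assumptions, and it presumes the two frames are already aligned, which on a moving aircraft would require ego-motion compensation first.

```python
import numpy as np


def frame_difference_mask(prev_frame, curr_frame, threshold=25):
    """Boolean mask of pixels whose intensity changed by more than `threshold`."""
    # Widen to a signed type so subtracting uint8 images cannot wrap around.
    diff = np.abs(curr_frame.astype(np.int16) - prev_frame.astype(np.int16))
    return diff > threshold


def mask_centroid(mask):
    """(x, y) centroid of the True pixels, or None if nothing changed."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None
    return float(xs.mean()), float(ys.mean())


# Synthetic frames: a uniform sky, then a 2x2 bright target appearing.
prev_frame = np.full((64, 64), 100, dtype=np.uint8)
curr_frame = prev_frame.copy()
curr_frame[10:12, 20:22] = 200  # rows 10-11, columns 20-21

mask = frame_difference_mask(prev_frame, curr_frame)
print("changed pixels:", int(mask.sum()))
print("centroid (x, y):", mask_centroid(mask))
```

A distant aircraft may cover only a handful of pixels, so the threshold trades missed detections against false alarms from sensor noise and moving clouds. That trade-off is one reason such a cue would plausibly be paired with a learned classifier rather than used alone.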


Publications

- In progress

Acknowledgements

This work was supported by the Washington State Joint Center for Aerospace Technology Innovation (JCATI). We gratefully acknowledge JCATI’s commitment to advancing aerospace research and enabling collaboration between academia and industry. Their support made it possible for our team to integrate advanced computer vision, machine learning, and embedded sensing technologies into a next-generation Detect and Avoid system for Remotely Piloted Aircraft Systems. We also thank Hover, Inc. for their technical partnership, hardware support, and guidance throughout this project.
